LOCATE - Visual Localization in Natural Environments

Martin Čadík

Jan Brejcha, Jan Tomešek, Petr Bobák, Tomáš Polášek, Jiří Hanzlíček, Milan Munzar, David Hříbek

CPhoto@FIT, Brno University of Technology, Czech Republic

cadikm@centrum.cz

Keywords: LOCATE, visual localization, vision-based localization, image geolocation, visual odometry, geo-localization, geo-tagging, image to model registration, Terrain-Aided Navigation (TAN), Terrain Referenced Navigation (TRN), vision-based navigation, correlating measured terrain with digital terrain model, 3D alignment, re-photography, cross-domain registration, extrinsic calibration, 6-DoF, photograph peak tagging, automatic geo-registration, camera pose estimation, place recognition

Abstract

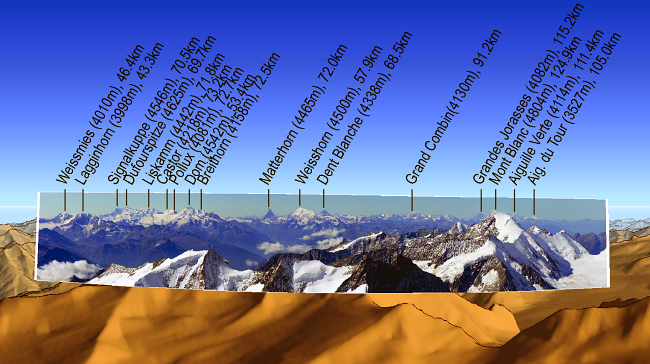

Project LOCATE deals with localization in natural environments. The aim is to accurately determine the location and orientation of the camera that captured a given photograph. We introduce a system for automatic alignment of the query photo with a geo-referenced 3D terrain model, and we propose a new alignment metric that accurately predicts the camera orientation following the large-scale visual localization step. A sufficiently accurate match between a photograph and a 3D model opens new possibilities for image enhancement. It can be used to turn photographs into a realistic virtual 3D experience, for example by automatically highlighting elements in the image such as the travel path taken, the names of mountains, or other landmarks. Furthermore, the synthetic depth map and/or the whole 3D model aligned with the query photo can be used for novel view synthesis, image relighting, dehazing, or refocusing.
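Once the camera pose is known, labeling a landmark such as a mountain peak reduces to projecting its geo-referenced position into the photo. The following is a minimal sketch of that final labeling step only, not LOCATE's actual code. It assumes that the pose is already estimated and expressed in a local East-North-Up (ENU) frame in metres, that the camera is an ideal pinhole with the principal point at the image centre, and that the focal length is known in pixels. `CameraPose` and `project_landmark` are hypothetical names, and the roll sign convention is an assumption. Occlusion by the terrain itself is omitted here; a real system would test for it, for example against the rendered synthetic depth map.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class CameraPose:
    """Hypothetical 6-DoF pose in a local East-North-Up (ENU) frame (metres, degrees)."""
    position: Vec3        # camera centre as (east, north, up)
    azimuth_deg: float    # heading, measured clockwise from north
    elevation_deg: float  # pitch, positive means looking up
    roll_deg: float       # rotation about the optical axis (sign convention assumed)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def project_landmark(pose: CameraPose, landmark: Vec3, focal_px: float,
                     width: int, height: int) -> Optional[Tuple[float, float]]:
    """Project an ENU point to pixel coordinates with an ideal pinhole camera.

    Returns None if the point lies behind the camera or outside the image.
    Occlusion by the terrain is NOT checked.
    """
    az = math.radians(pose.azimuth_deg)
    el = math.radians(pose.elevation_deg)
    ro = math.radians(pose.roll_deg)

    # Camera axes expressed in the ENU world frame (all unit length, orthogonal).
    forward = (math.sin(az) * math.cos(el), math.cos(az) * math.cos(el), math.sin(el))
    right = (math.cos(az), -math.sin(az), 0.0)
    up = _cross(right, forward)
    # Apply roll by rotating the right/up axes about the optical axis.
    right, up = (
        tuple(math.cos(ro) * r + math.sin(ro) * u for r, u in zip(right, up)),
        tuple(-math.sin(ro) * r + math.cos(ro) * u for r, u in zip(right, up)),
    )

    d = tuple(p - c for p, c in zip(landmark, pose.position))
    z = _dot(d, forward)
    if z <= 0.0:
        return None  # behind the camera
    x = _dot(d, right)
    y = -_dot(d, up)  # image rows grow downwards

    u_px = width / 2.0 + focal_px * x / z
    v_px = height / 2.0 + focal_px * y / z
    if not (0.0 <= u_px < width and 0.0 <= v_px < height):
        return None  # projects outside the photo
    return (u_px, v_px)
```

For instance, with the camera at the origin facing north, a 1920×1080 image, and a 1000 px focal length, a peak 5 km straight ahead projects to the image centre (960, 540), while a point 500 m east and 250 m higher lands at (1060, 490).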

Resources:

[Press release (Cz)]

[Press release (En)]

[Press release (De)]

Camera orientation estimation:

[CVPR 2024 Paper]

[3DV 2018 Oral Paper]

[CVPR 2011 Oral Paper (pdf)]

[CVPR 2011 Supplementary materials (pdf)]

[Video (avi)]

[CVPR 2011 Talk (pdf)]

[JVCIR 2016 Paper (pdf)]

[Comparison of Semantic Segmentation Approaches for Horizon/Sky Line Detection - IJCNN Paper 2017]

[Resource Efficient Mountainous Skyline Extraction using Shallow Learning - IJCNN Paper 2021]

Camera elevation estimation:

[BMVC 2015 Paper (pdf)]

[BMVC 2015 Supplementary materials (project webpage)]

[BMVC 2015 Extended abstract (pdf)]

[BMVC 2015 Poster (pdf)]

Camera pose estimation from lines:

[Camera Pose Estimation from Lines using Direct Linear Transformation - PhD thesis 2017 (pdf)]

[Absolute Pose Estimation from Line Correspondences using Direct Linear Transformation - CVIU 2017 Paper (pdf)]

[BMVC 2015 Paper (pdf)]

[BMVC 2015 Poster (pdf)]

[BMVC 2015 code (Matlab)]

Visual geo-localization:

[CrossLocate: Cross-modal Large-scale Visual Geo-Localization in Natural Environments using Rendered Modalities]

Evaluation of visual geo-localization methods:

[HiVisComp 2015 Poster (pdf)]

[State-of-the-Art in Visual Geo-localization - Pattern Analysis and Applications (pdf)]

[GeoPose3K: Mountain Landscape Dataset for Camera Pose Estimation in Outdoor Environments - Image and Vision Computing (pdf)]

Computational photography applications:

[LandscapeAR: Large Scale Outdoor Augmented Reality by Matching Photographs with Terrain Models Using Learned Descriptors]

[Reinforced Labels: Multi-Agent Deep Reinforcement Learning for Point-Feature Label Placement]

[Video Sequence Boundary Labeling with Temporal Coherence]

[ACM UIST Immersive Trip Reports]

[Computational photography - Habilitation Thesis]

[HDR Video Metrics (pdf)]

[Automated Outdoor Depth-Map Generation and Alignment]

Funding:

Last changes: 04/2019, Martin Čadík.